Microsoft Translation services through SwiftKey keyboard

The opportunity

In this world of global citizenship, SwiftKey has a large base of multilingual users with varying levels of fluency and differing needs.

If you're in the flow of messaging, it's annoying to have to switch apps to translate text for comprehension. It's just as annoying to switch into a translator app to type something out, check your grammar and spelling, copy the text, reopen your messaging app and paste the text in order to continue the chat.

The solution

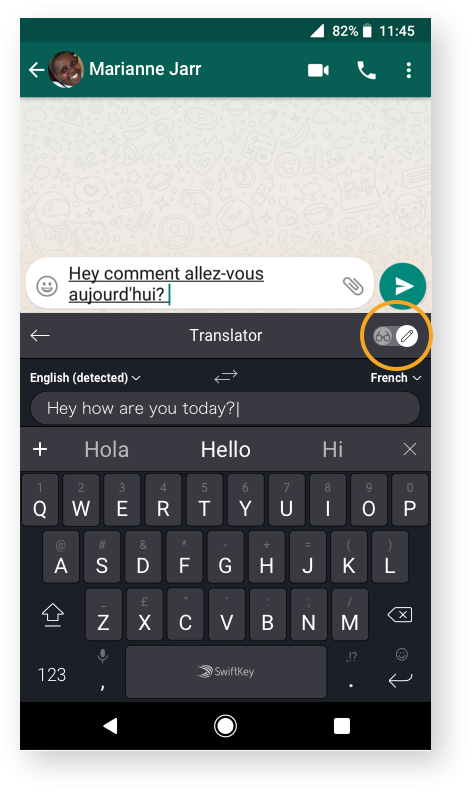

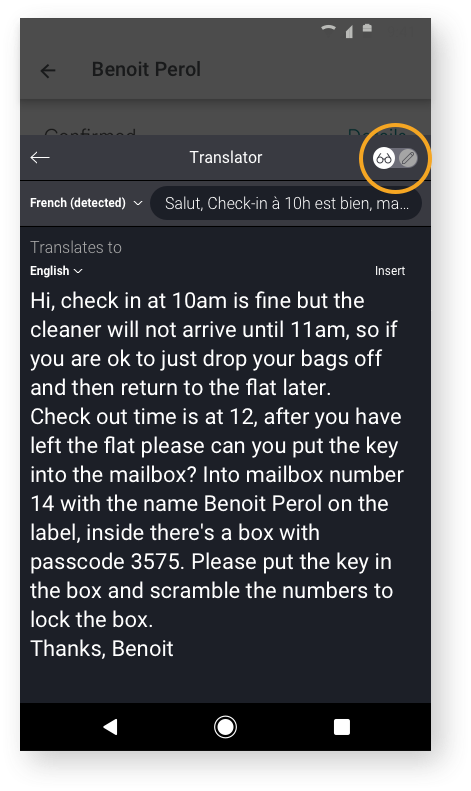

SwiftKey keyboard now integrates Microsoft Translator into your keyboard, so that you can type and see inline translations, or quickly translate text to read, whilst staying in the context of the app you are composing in.

Role: Lead Designer

Design research

Workshop/design sprint

Journey mapping

Wireframes + user flows

Interaction design

Visual design

Prototypes

Involved in usability testing

Platform

Android

CHALLENGES

Working remotely with a team in Seattle with a 9-hour time difference

There was no designer on the Microsoft Translator team. The design had previously been cobbled together by the engineers and PM, with no previous usability testing, no asset repository and no explanations for design decisions

The keyboard toolbar, which is now the entry point for this feature, was undergoing a design overhaul at the time and in constant flux, making alignment difficult

Technical feasibility needed to be scoped out before the feature was approved. In turn, this meant tight deadlines when I came on board with the project, in order to not block further development

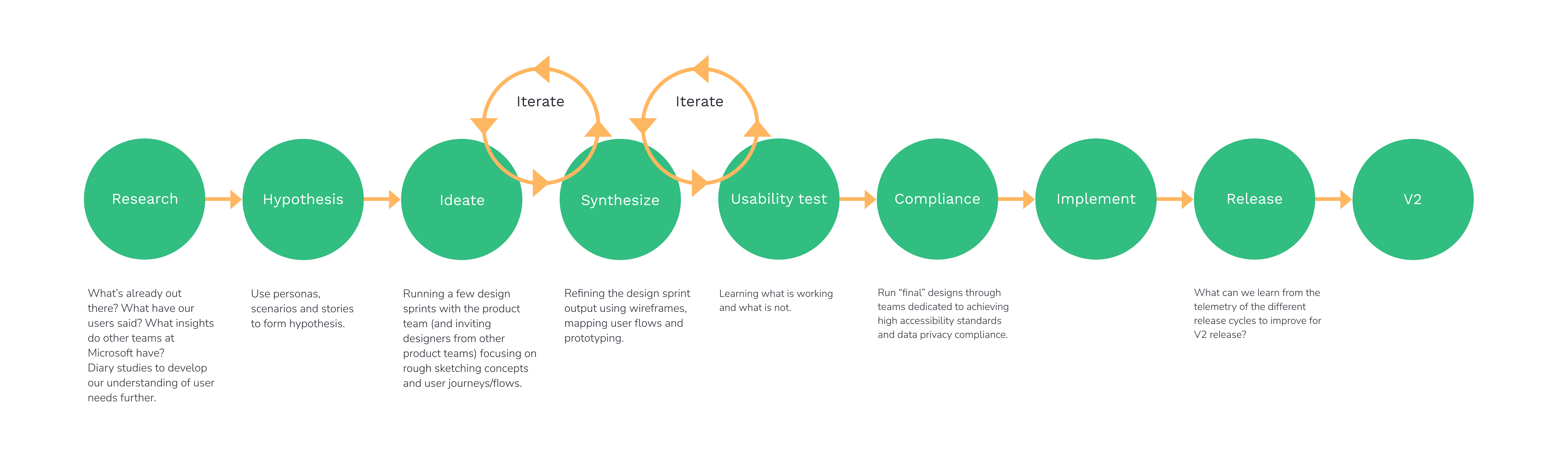

Process

RESEARCH

Audit of Microsoft Translator app

Insights from Microsoft Translator team

Going through Microsoft Translator support tickets

Competitor review

The design research team sent out surveys and ran diary studies with existing translator users to gain more insight into scenarios and pain points

GOAL-DRIVEN PERSONAS

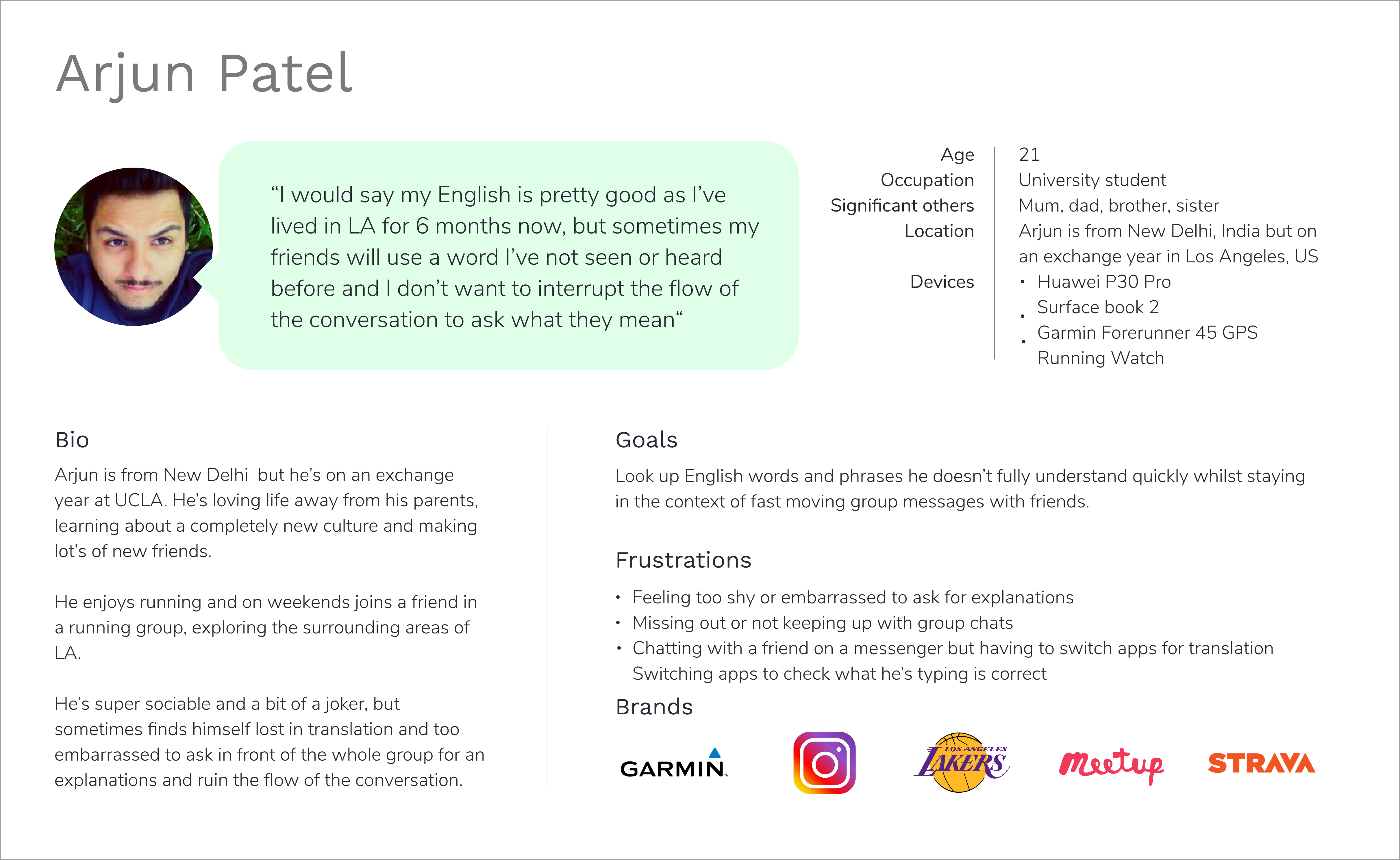

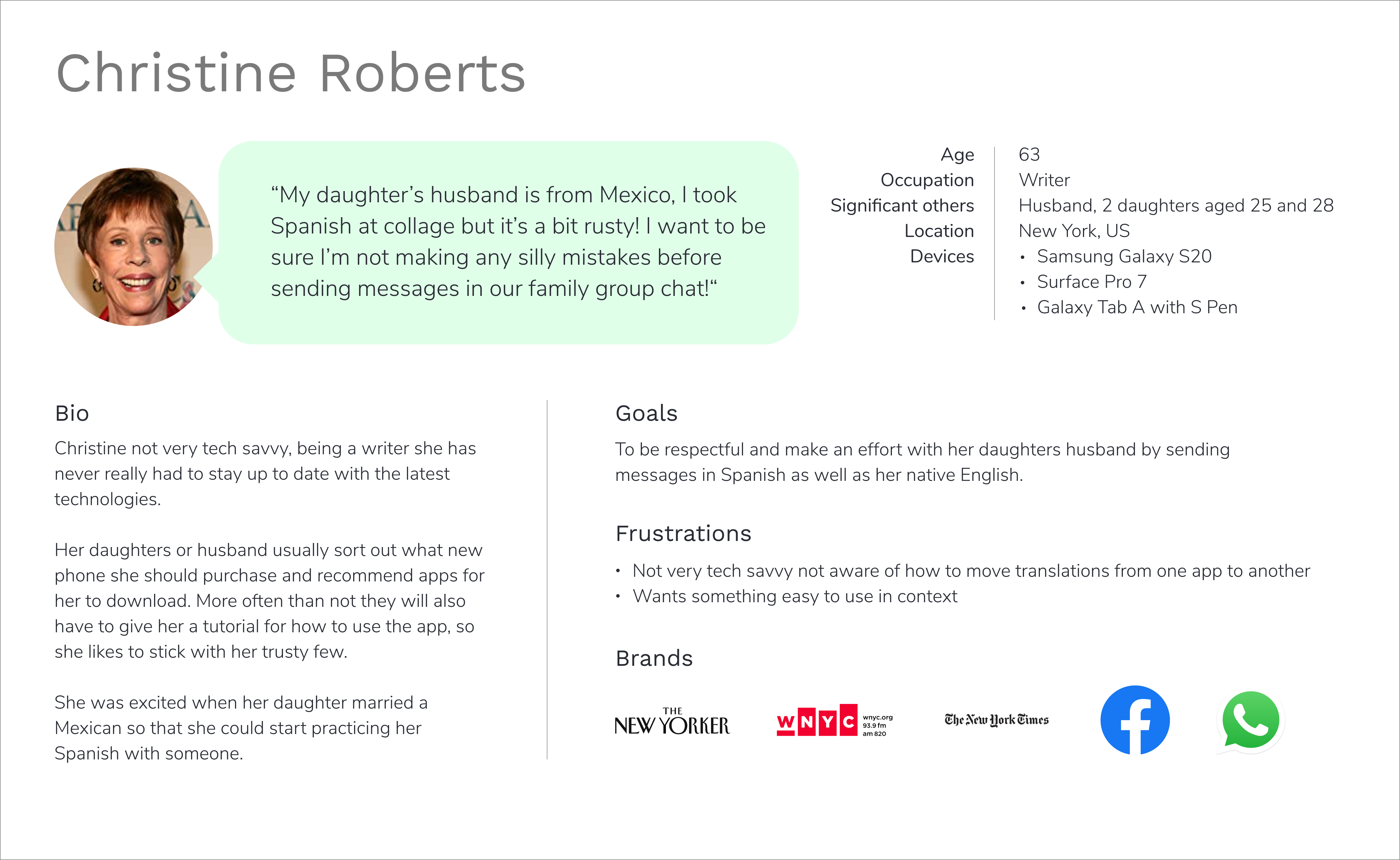

I leveraged work from a separate workstream on SwiftKey personas, together with preliminary studies from our research team and findings from the Microsoft Translator team, to come up with a persona that best matched our target audience.

Who this is for...

Users who speak more than one language - but may not be fluent in both/all - who want to use their keyboard to:

- Chat with friends and family in messenger apps

- Write more formal/correct language in email form

- Check their written work if they’re studying a language

Who this is not optimised for...

We are not a translator app, so this is not about optimising for non-keyboard input translation services, for example:

1. Translating extended mobile content from outside of the keyboard e.g. news articles

2. Using the camera for visual text recognition to translate a dinner menu

3. Text-to-speech translation and vice versa

Example Personas

USING PERSONAS AND KEY SCENARIOS TO MAP INTENT

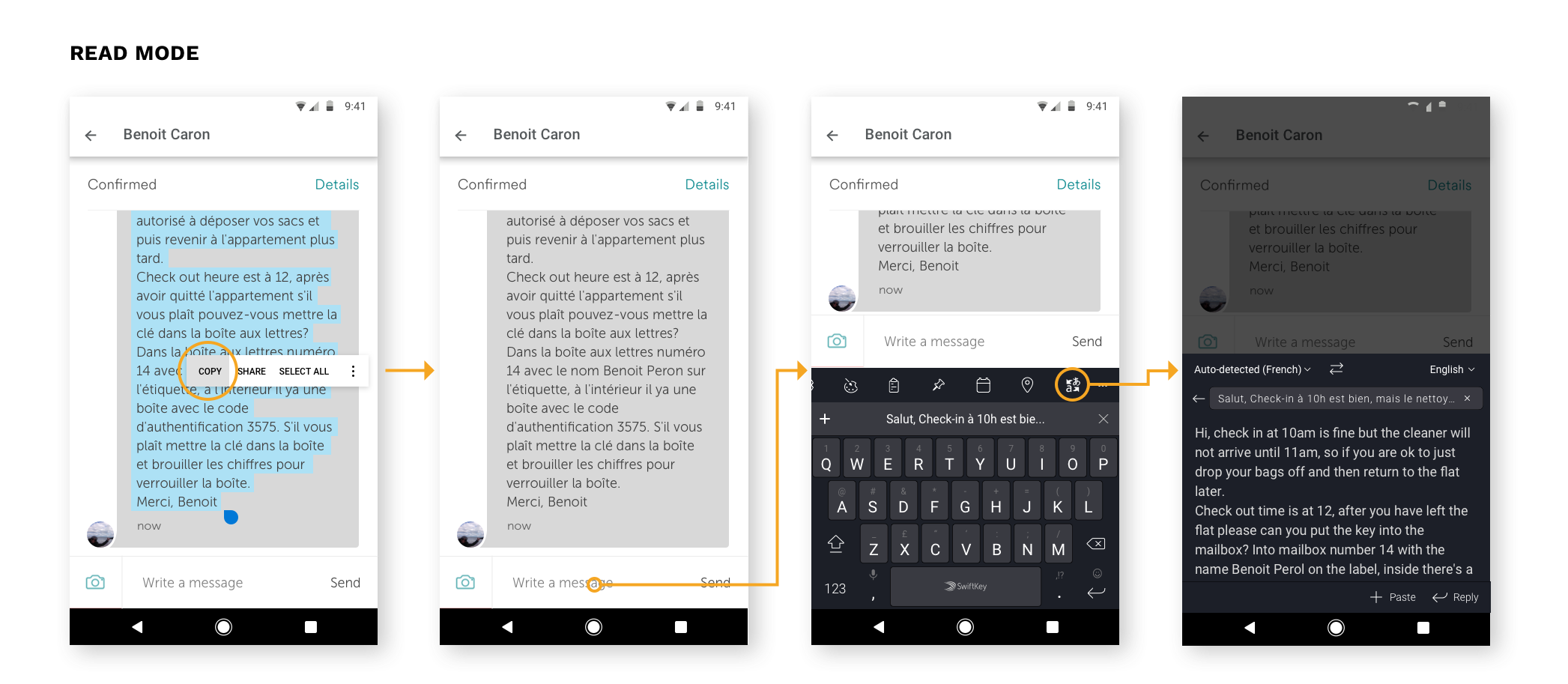

It was clear that there were two core use cases: 1) translating to read and comprehend and 2) translating to communicate and write.

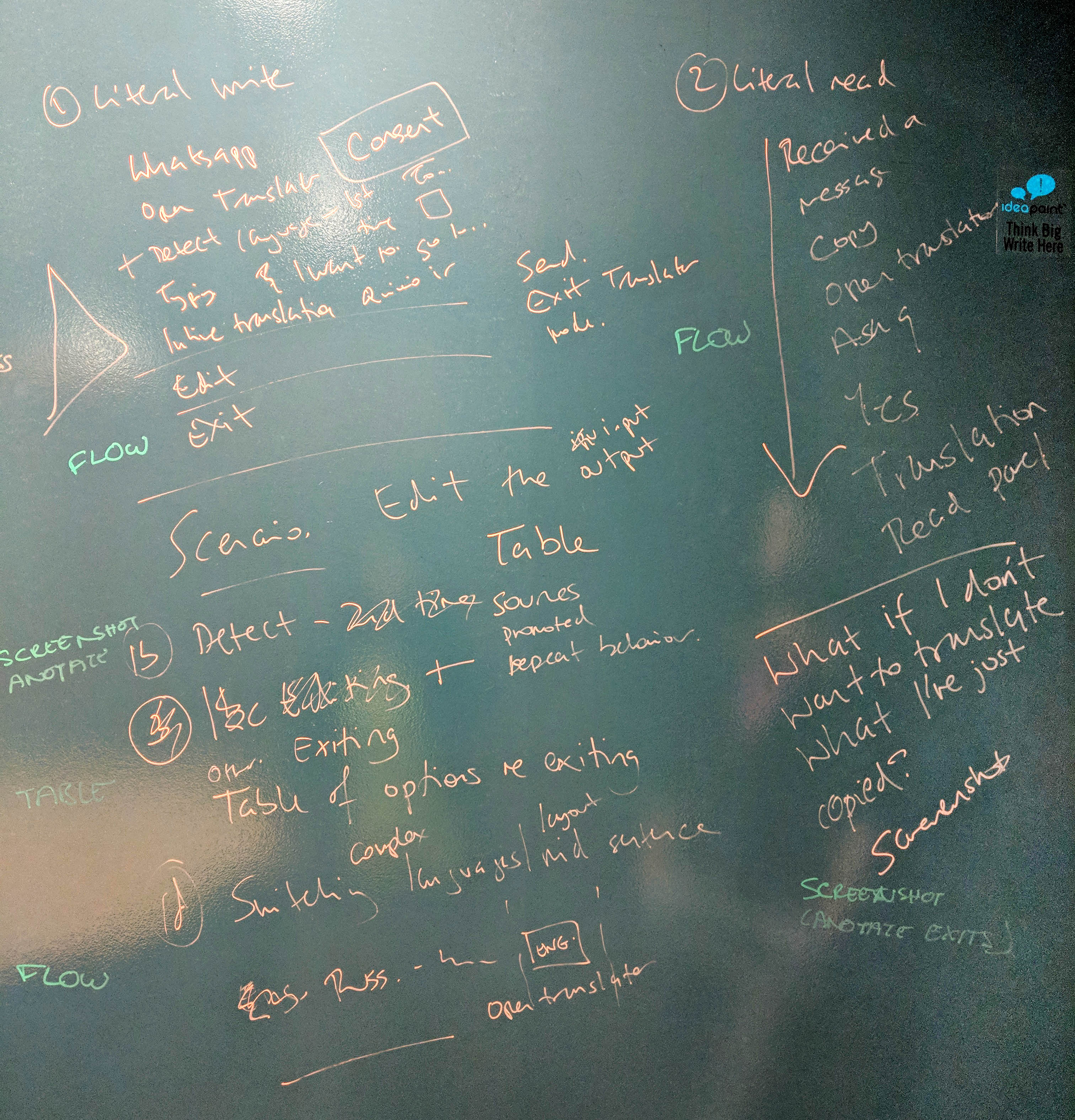

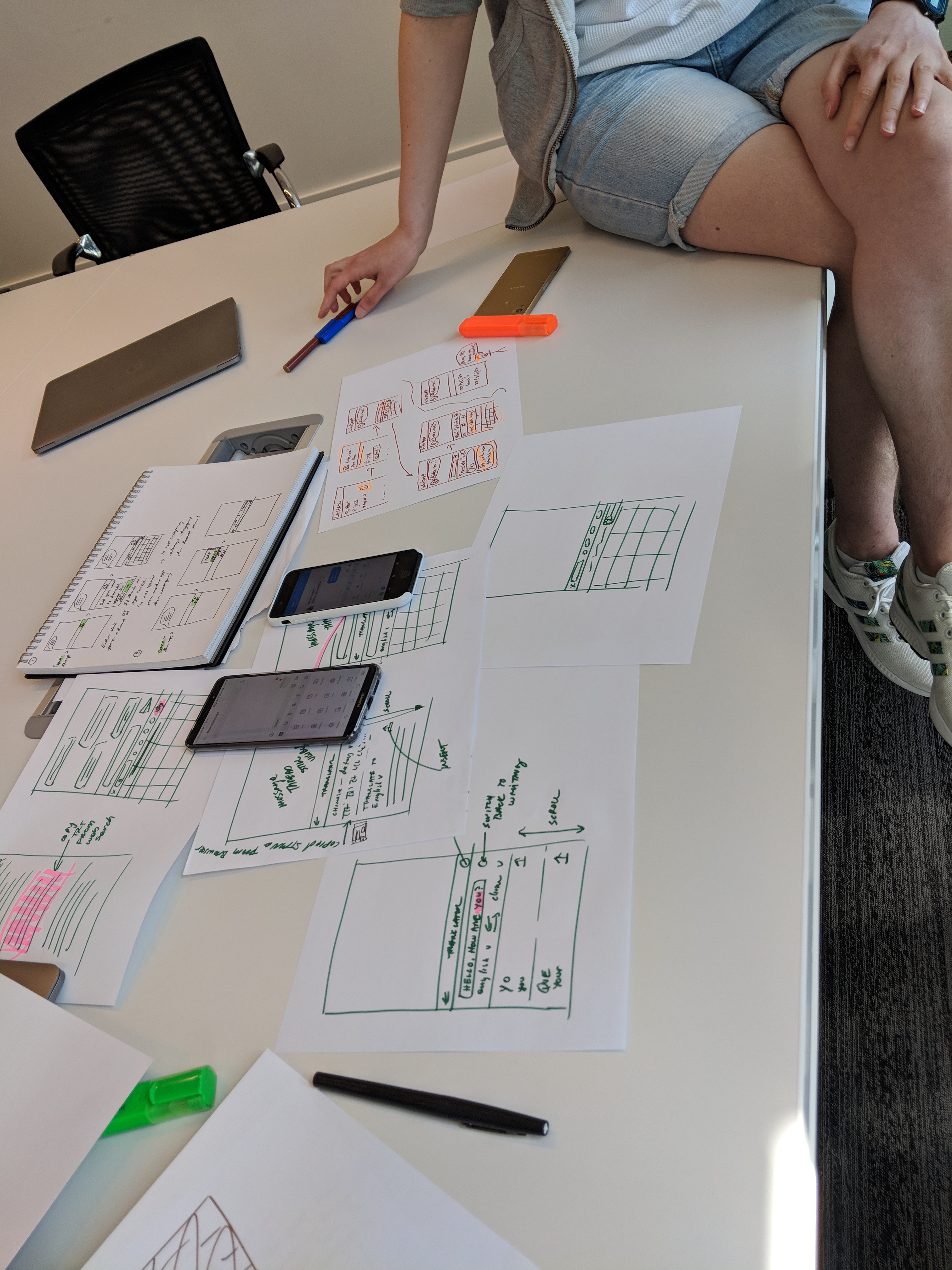

As a team we brainstormed stories and scenarios, breaking them down into simple interactions. It was not only a great way to get more perspectives on the problem, but also for the team to align on what the core tasks were and how they all fit together in a flow.

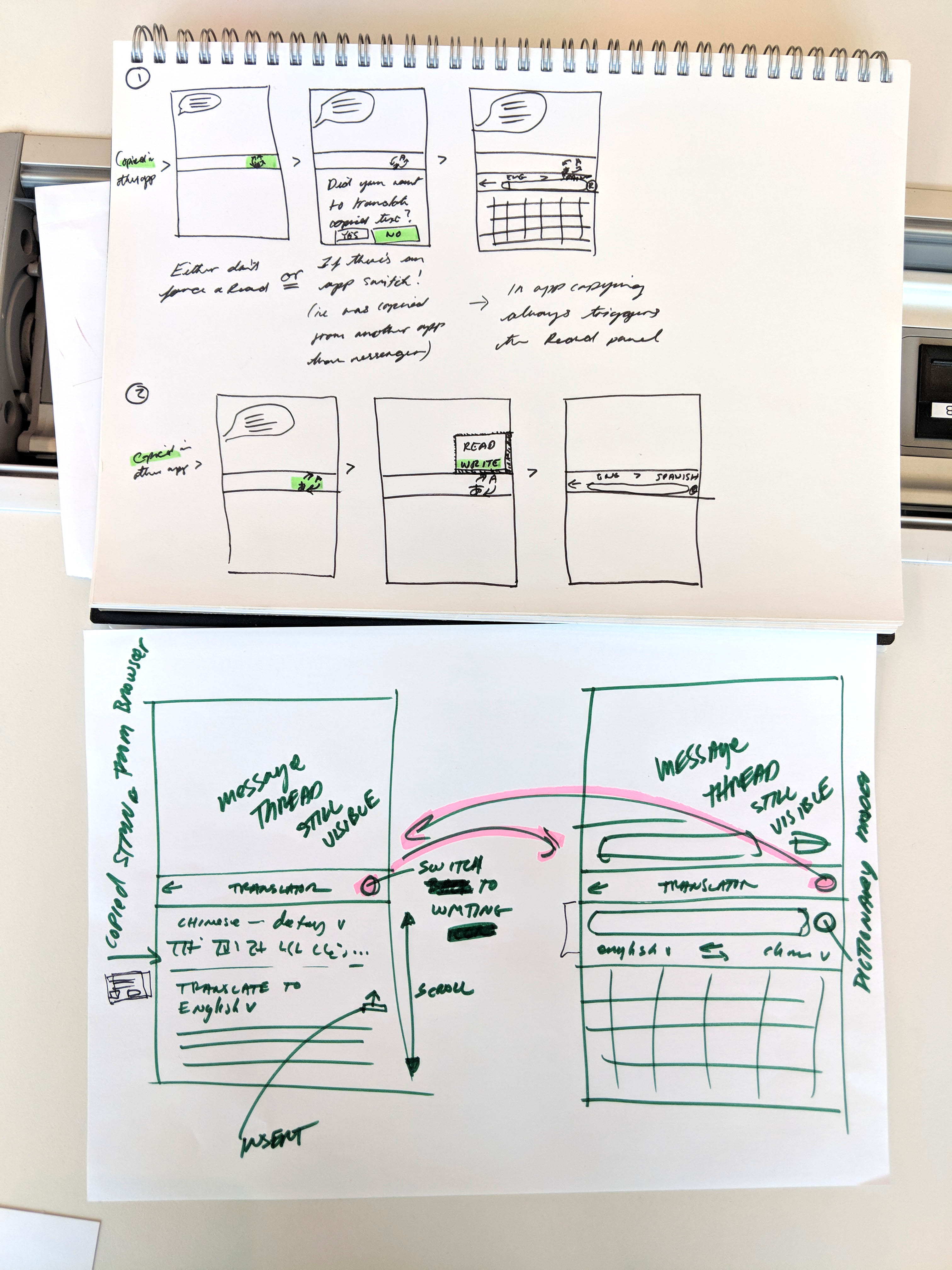

Once I had sorted through the core user tasks and contexts we wanted to focus on, I ran a couple of design sprints with the product team, also inviting designers from other teams who might be interested. In the first sprint, we speed-sketched different possibilities for user journeys and interaction models. It was a great way to quickly generate a lot of different ideas.

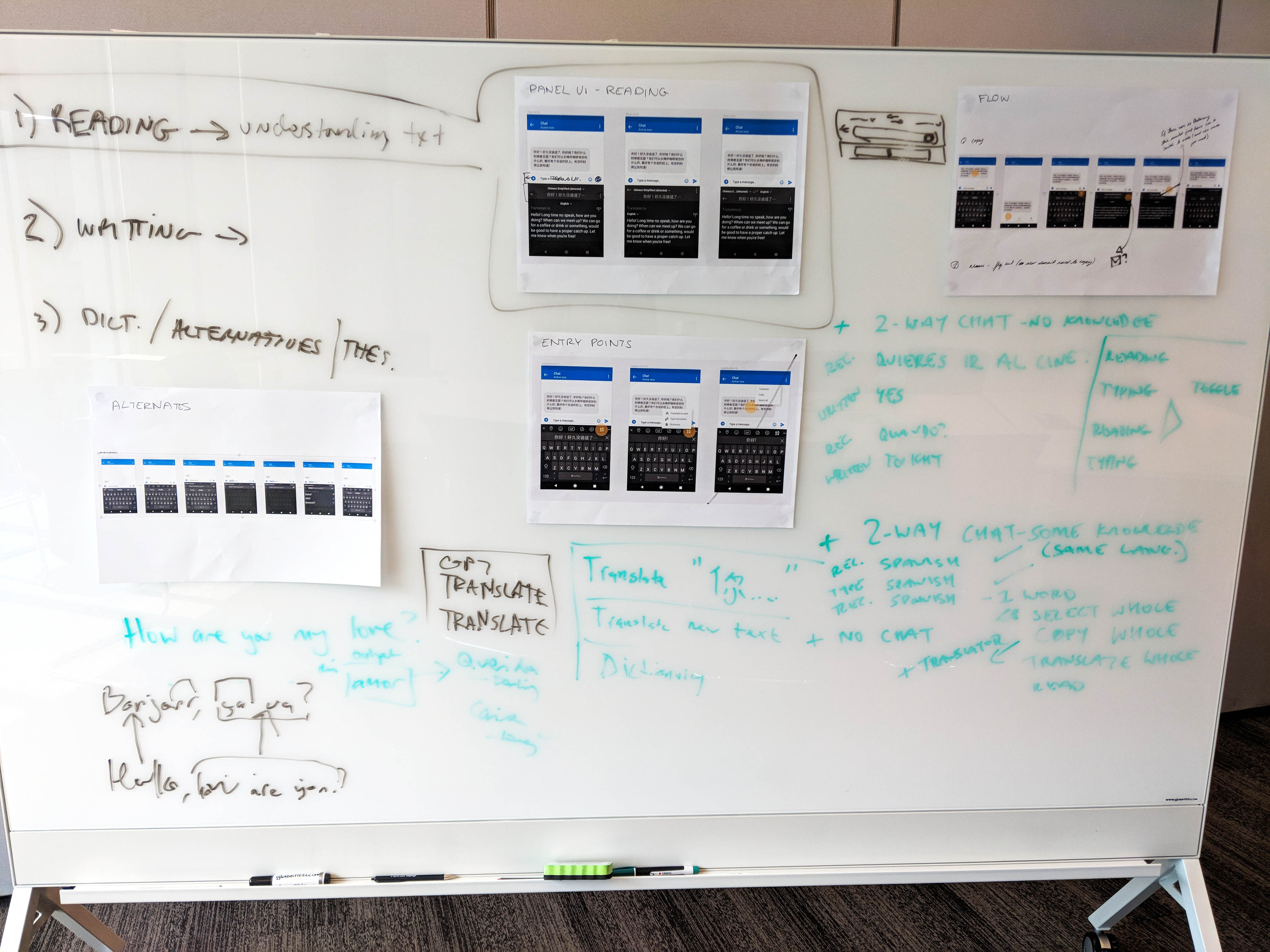

I combined and mocked up different aspects of the output from the first sprint and used this as a basis for the second sprint - to evaluate, develop and stress test the designs in different user flows.

The top two images show the sketches from the first design sprint I ran with the product team.

The image above shows the whiteboard session from the second design sprint, and how the ideas became more refined each time.

PROTOTYPING

With two completely different user intents, there were many micro-interactions to think about. I started by mapping out the user actions for each use case, to understand the different entry points and user states to be considered.

At first, I cautiously gave the user full control over which state they were in: read or write. I prototyped the above toggle concept, which we tested and eventually decided against, preferring to streamline the flow by using trigger moments to invoke the correct read or write state.

USABILITY TESTING

I find that usability testing is usually the most insightful part of the design process. If there are any red flags, you can often get clear, tangible next steps for the design iteration just by observing what the user does.

I worked closely with the user researcher to devise a script, and tailored the prototype to validate the assumptions I had made and the design direction.

In this case, we found that users did find it useful to have a different UI for read and write mode. However, they didn't notice or see the need for the explicit "mode" change; they just wanted the keyboard to know already.

ITERATE AND REFINE

Knowing when a user wants to translate to read versus translate to write, in order to invoke the correct state, was a tricky problem to solve. So I made a few key assumptions as starting points, which were then tested and iterated upon. Two examples:

Hypothesis 1.

"If a user selects text to copy and immediately taps to use the translator we can assume that they would like to go into “read” mode to paste and translate text for comprehension"

And so by default...

Hypothesis 2.

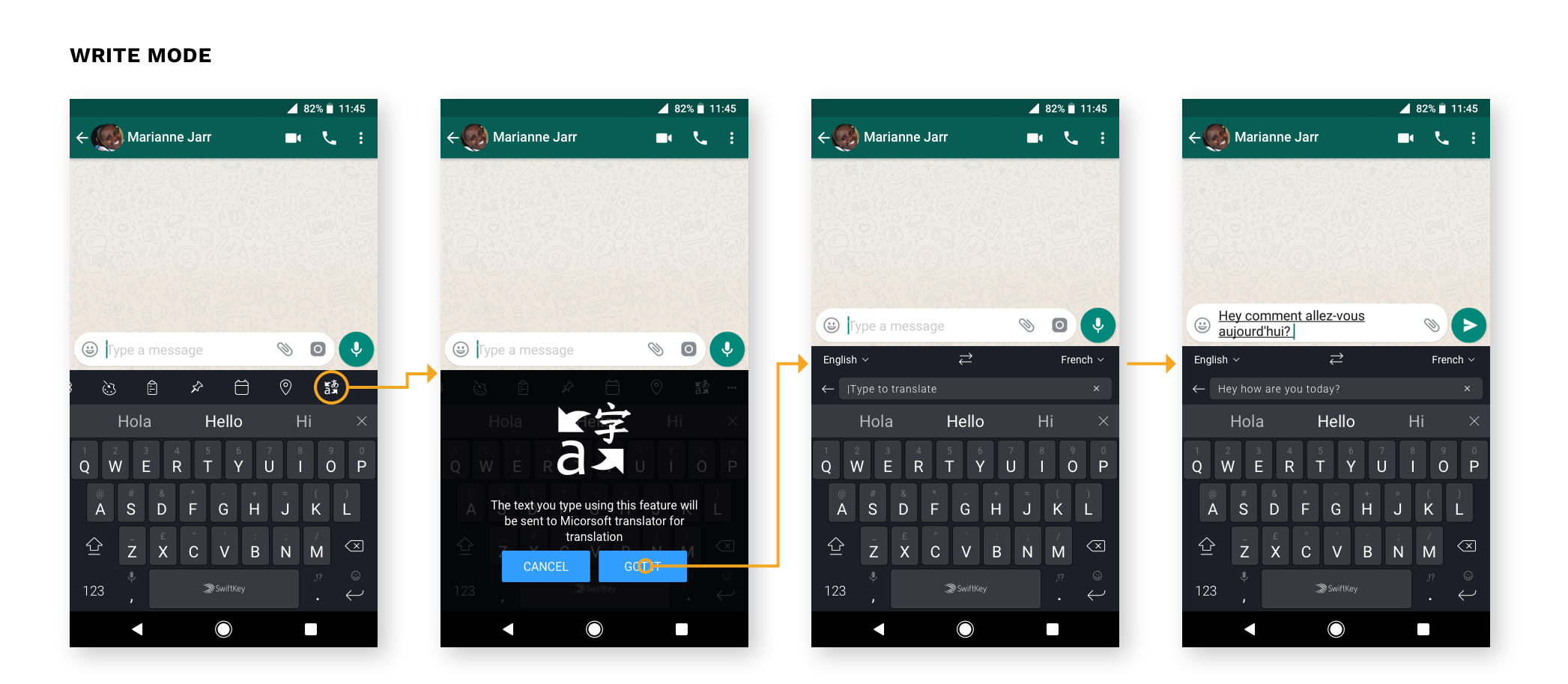

"If a user taps the translator from any other state, where they have not just copied some text, we can assume that they would like to enter “write” mode to translate text for compose"

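As an illustration only, the two hypotheses above can be read as a simple decision rule. The sketch below is written in Python for brevity and is not the shipped Android implementation. The names `KeyboardState`, `choose_mode` and `RECENT_COPY_WINDOW_SECONDS` are hypothetical, and the 10-second window is an assumed stand-in for what "immediately" means.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TranslatorMode(Enum):
    READ = "read"    # paste and translate text for comprehension
    WRITE = "write"  # translate text while composing


# Assumption: how recent a copy must be to count as "immediately" before the tap.
RECENT_COPY_WINDOW_SECONDS = 10.0


@dataclass
class KeyboardState:
    # Hypothetical field: time of the last copy action in seconds,
    # or None if the user has not copied anything.
    last_copy_time: Optional[float]


def choose_mode(state: KeyboardState, tap_time: float) -> TranslatorMode:
    """Pick which translator state to invoke when the translator is tapped."""
    if state.last_copy_time is not None:
        elapsed = tap_time - state.last_copy_time
        if 0 <= elapsed <= RECENT_COPY_WINDOW_SECONDS:
            # Hypothesis 1: text was just copied, so assume "read" mode.
            return TranslatorMode.READ
    # Hypothesis 2: any other state defaults to "write" mode.
    return TranslatorMode.WRITE
```

For example, `choose_mode(KeyboardState(last_copy_time=100.0), tap_time=103.0)` selects read mode, while a state with no recent copy falls through to write mode. Making write the default means a wrong guess still leaves the user in a state where they can start typing.
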
FLESHING OUT DESIGN

Once core functionality and flow were validated through usability testing, I started to finalise states and scenarios such as error states and the first-run experience (FRE), most notably any consent checkpoints which need to be handled in onboarding.

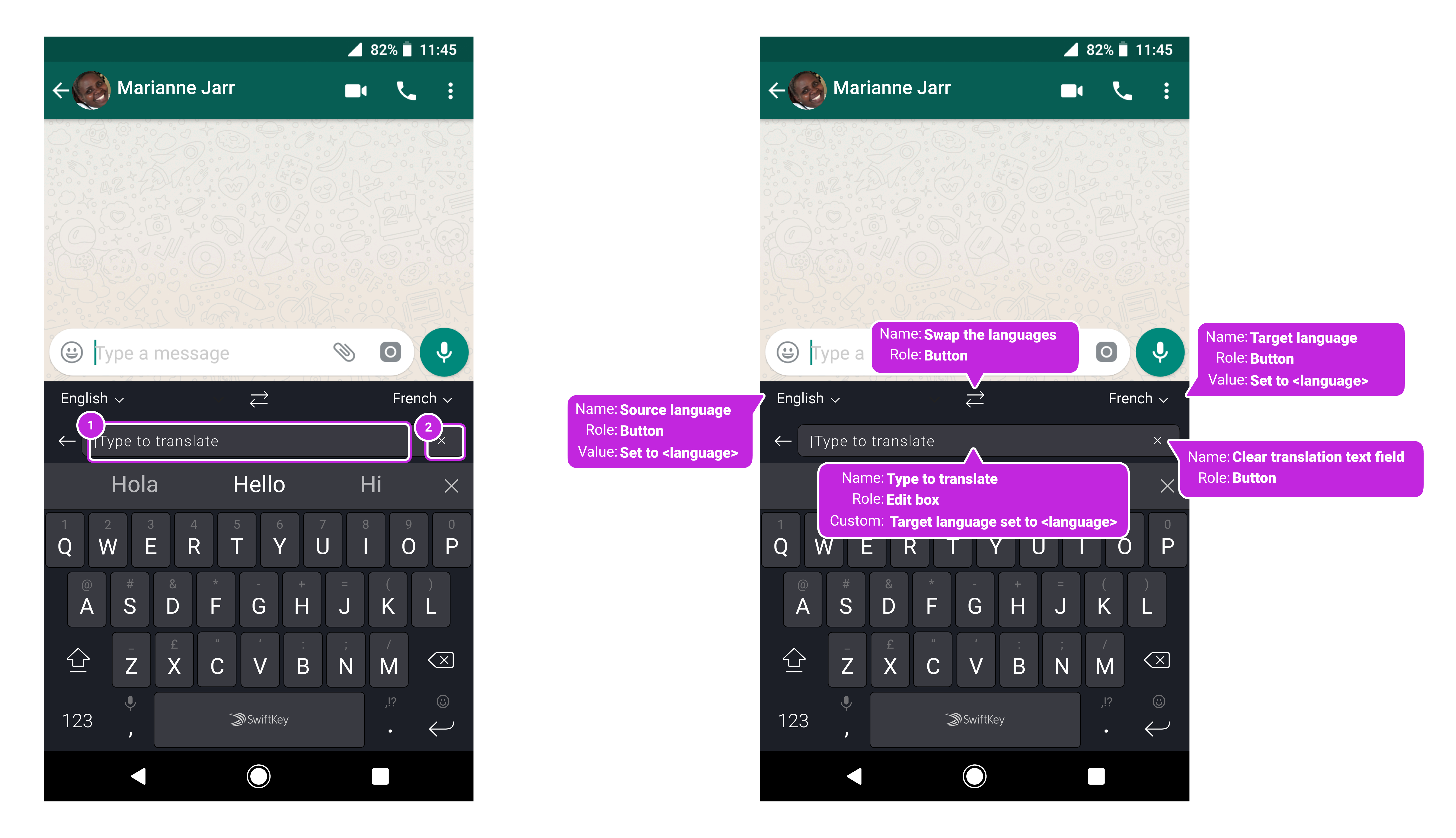

ACCESSIBILITY

It's also worth noting that as the designs become increasingly hi-fidelity throughout the iterative test and design cycle, I am constantly taking into consideration accessibility standards, tap points, entry points, error states, theming for contrast, TalkBack, etc. Below is an example of the accessibility spec I gave to the developers for TalkBack instructions.

IMPLEMENTATION

I worked in an agile way with the developers from early on in the process, so that they could raise any red flags for technical implementation and there were no surprises when it came to the implementation stage. As with most projects, I worked closely and iteratively with them, providing all the redlines and assets.

Final flows for read and write

PROJECT OUTCOME

Microsoft Translator is now available to use from your SwiftKey Toolbar

Translator for SwiftKey enables you to quickly and easily translate text in over 60 languages without ever leaving your keyboard

- Released in September 2018

- Over 8M monthly active users

Copyright © 2022 Kah-Mun Liew | UX and Product Designer